Embodied interaction design

with AI live-tracking of multiple users

User Experience Case Study

OVERVIEW

Sometimes, as a designer, you are presented with an exceptionally exciting and challenging scenario:

The developers of a piece of new tech have not yet found what to use it for and need a use case design, because there is nothing quite like it on the market yet.

Such was the situation when the artificial intelligence (AI) company Grazper and I explored a collaboration in 2023.

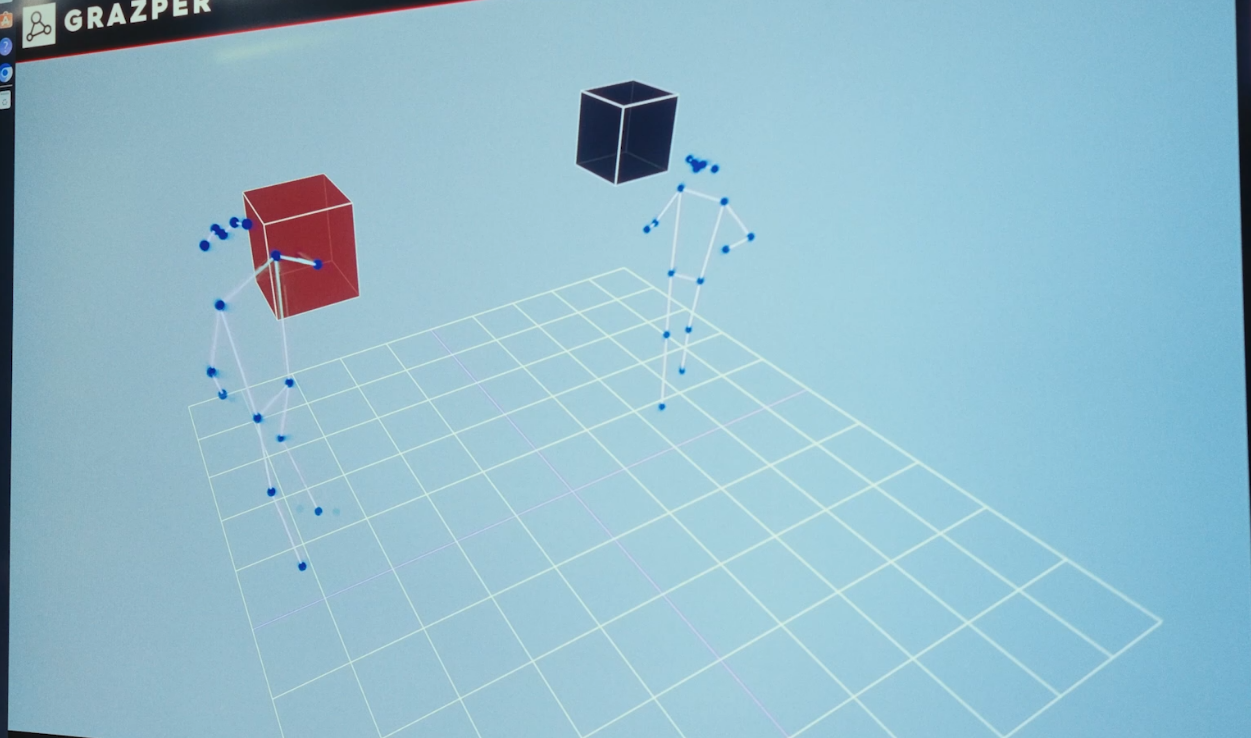

The company uses AI to produce 3D visualizations: video cameras placed around a large floor space track up to 5 people at a time, and the AI system translates the footage into anonymized skeletal rigs that show the users' movements in real time.

This article is an overview of my work.

DESIGN BRIEF

Create a use case for the AI technology that will

• accurately demonstrate its features

• wow audiences at tech fairs in order to win new customers or potential partners

MY ROLES

• PRODUCT DESIGN

• UX DESIGN

• ART DIRECTION

• CONCEPT DEVELOPMENT

• PRODUCTION ROADMAP incl. project pipeline in Unreal Engine, production plan for physical installation, team roles, timeline, budget, recruiting plan.

Live video-tracking of users is translated to virtual skeletons

RESEARCH

When considering new technologies, without known use cases to draw from, my first step is always to gather information.

After a dive into how the technology works, learning more about the stakeholders and getting a good understanding of the features and limitations of the system, I uncovered the constraints.

This led to further research and to brainstorming ideas for embodied interaction design.

CONSTRAINTS

● New technology with unproven market potential and undefined users

● Live-conversion to 3D skeletons of max 5 tracked users

● Show positional tracking (sets it apart from competitors)

● Tracking limited to 30 skeletal joints per individual

● No tracking of fingers or facial expressions

● No eye-tracking, but tracking head orientation possible

● Must be intuitive to interact with and to learn to use

● Users should not require tutorial or presence of staff

● Should be viewable by many people at the same time

● Impress, entertain and intrigue users as well as onlookers

● Should work regardless of age group or user background

● A design that can be developed and implemented within 3 months

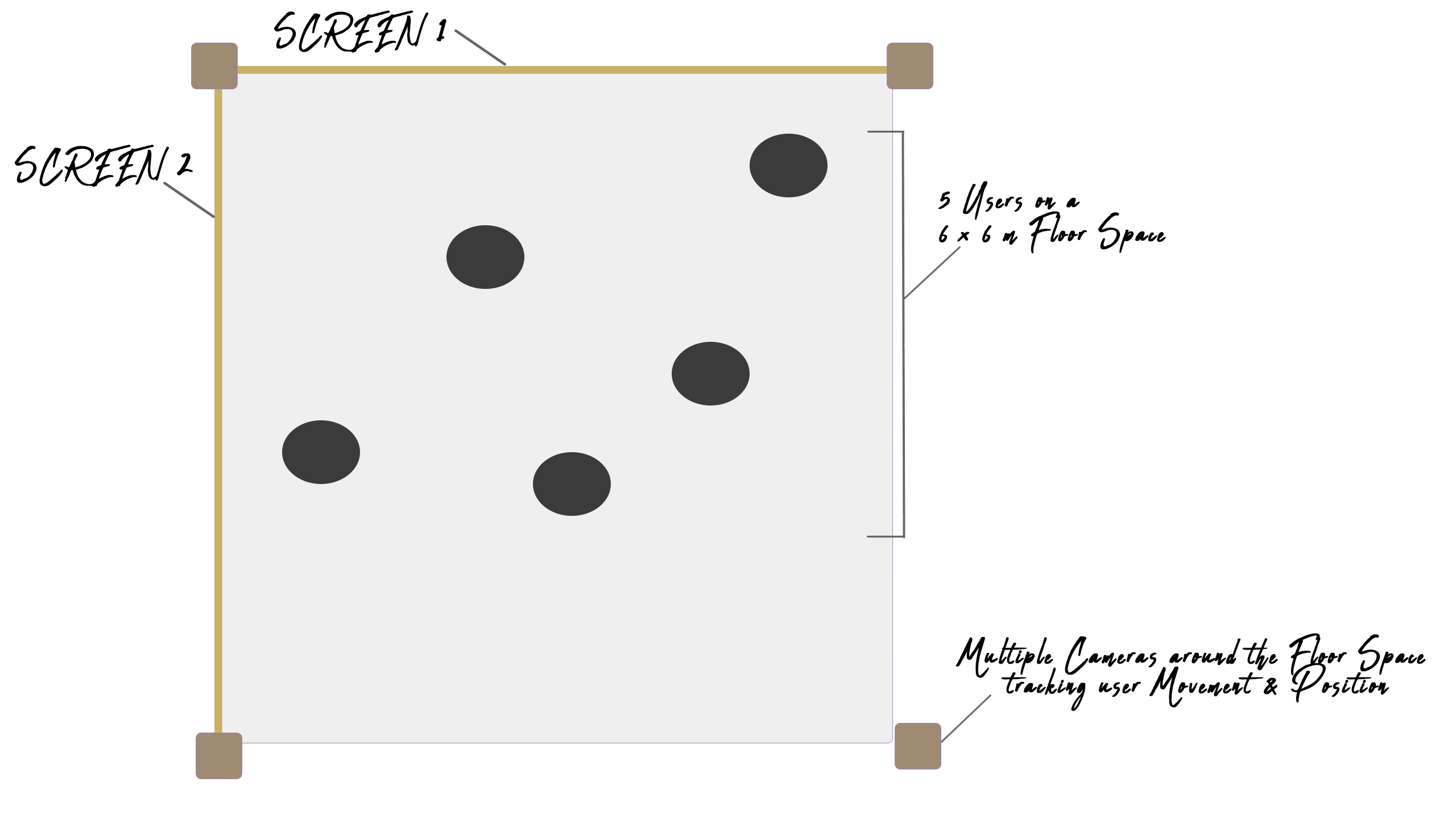

● Preliminary target setting: 6x6m booth at public tech event
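The tracking-related constraints above can be summarised as a small data model. The sketch below is an illustration only: the class names, field names and the coordinate convention are assumptions made for this example and do not describe Grazper's actual data format.

```python
from dataclasses import dataclass
from typing import List, Tuple

MAX_TRACKED_USERS = 5      # from the constraints: max 5 tracked users
JOINTS_PER_SKELETON = 30   # from the constraints: 30 skeletal joints per individual

Vec3 = Tuple[float, float, float]


@dataclass
class Skeleton:
    """One anonymized tracked user (hypothetical structure)."""
    joints: List[Vec3]    # joint positions in metres, floor coordinates (assumed)
    head_yaw_deg: float   # head orientation is available; fingers and eyes are not tracked

    def __post_init__(self) -> None:
        if len(self.joints) != JOINTS_PER_SKELETON:
            raise ValueError(
                f"expected {JOINTS_PER_SKELETON} joints, got {len(self.joints)}"
            )


@dataclass
class TrackingFrame:
    """All skeletons reported for one moment in time (hypothetical structure)."""
    skeletons: List[Skeleton]

    def __post_init__(self) -> None:
        if len(self.skeletons) > MAX_TRACKED_USERS:
            raise ValueError(
                f"at most {MAX_TRACKED_USERS} users can be tracked, "
                f"got {len(self.skeletons)}"
            )
```

Encoding the limits as validation makes it obvious early in prototyping when a design idea quietly assumes more users or more detailed joints than the system can deliver.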

ADDRESSING THE CONSTRAINTS

Target setting: 6x6m booth at public tech event

Should impress and intrigue users and onlookers, selling the capabilities of the system

Demonstrate the capabilities of the AI system: live positional tracking of multiple users

Positional tracking can be shown via 2 screens placed at a convex angle, either side by side or one above the other.

New tech with unproven market and undefined users

Top-down view of the design of the 6x6m physical setup after considering the constraints

Based on these deductions, I was able to formulate 4 different use cases, of which Fireflies was chosen as the project to take forward.

Below is a showcase of the Fireflies concept with a quick overview of the intended experience, the aesthetic design, mood board and concept art.

Notes on the technical implementation and a preliminary prototype of the virtual environment in Unreal Engine are presented at the end.

CONCEPT SHOWCASE

Fireflies

Imagine that a large, 6m wide screen in front of you

is actually a glass window to an old, peaceful forest.

On the other side are supernatural creatures

made of shining dust, floating in the air idly,

scattered like fireflies.

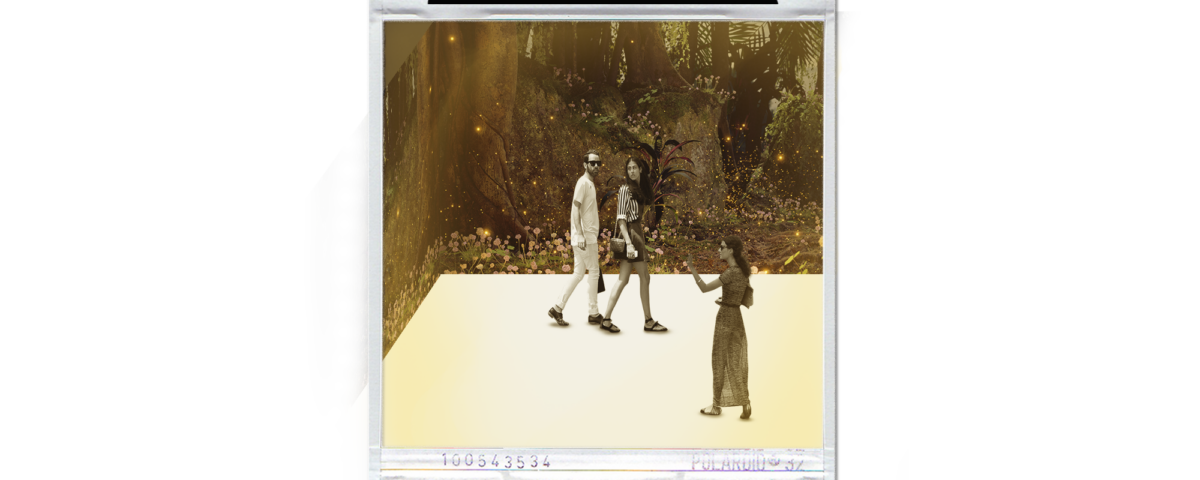

Reference images for the environment on the screens

As you come nearer to the "glass barrier",

the Fireflies mimic your movements

and turn into humanoid shapes:

lifting their limbs as you do,

walking as you walk,

turning as you turn.

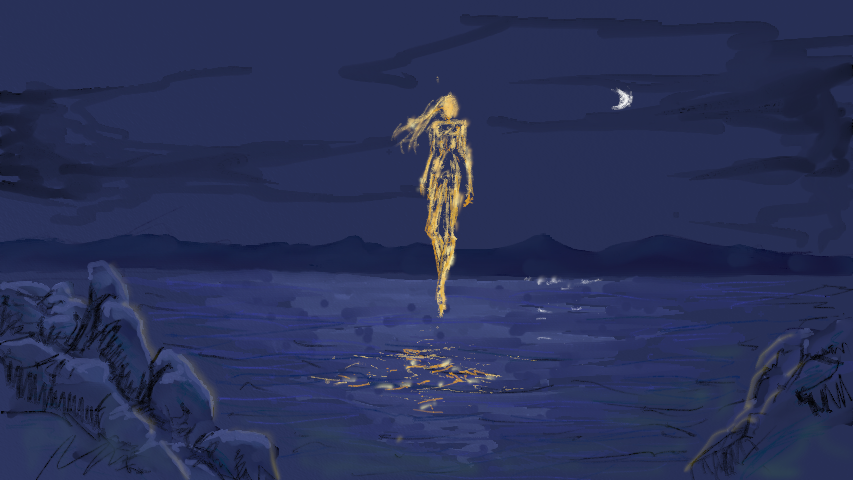

Reference images for the Fireflies

...But they also have "free will" and agency:

making eye-contact and smiling at you,

reacting to how quickly or slowly you move,

looking over their shoulder,

getting distracted by their environment.

Concept Art with Firefly creature

USE CASE DESIGN

"Fireflies" is one of many possible visual concepts for the use case design, which is itself an interactive, physical installation using 2 or more large screens for single or multiple users. The screens are 6m wide and placed at a convex angle to form a corner, either horizontally or vertically.

On a 6x6m floor space in front of the screens, up to 5 users at a time can interact with a real-time virtual environment running on Unreal Engine.

Unreal Engine is a game engine that is also used for high-budget film productions. It was chosen for its ability to generate high-quality 3D visuals in real time.

The tracked persons are translated to on-screen avatars, which are not 1:1 representations of the users, but instead represent autonomous creatures "meeting" the users. The illusion is that the screen is actually transparent glass, and on the other side are living, supernatural beings who can "choose" to interact with the users by mimicking their movements, gestures and position. However, they can also break out of mimicry and move, express themselves and act seemingly autonomously.

This "autonomy" is important, as it is used to mask any potential tracking problems and glitches in the real-time interaction.

The Firefly Creatures are "awakened" when a user facing the screen enters the floor space and thus triggers the interaction.
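The "awakening" trigger can be sketched as a simple check on position and head orientation. This is a minimal illustration under stated assumptions: it considers a single screen lying along the edge `y = 0` of the floor, treats a head yaw of 0 degrees as looking straight at that screen, and uses a facing tolerance of 60 degrees. None of these values or function names come from the actual system.

```python
FLOOR_WIDTH_M = 6.0           # floor space from the design: 6x6m
FLOOR_DEPTH_M = 6.0
FACING_TOLERANCE_DEG = 60.0   # assumed: how far the head may turn away from the screen


def is_on_floor(x: float, y: float) -> bool:
    """True if the position (in metres) lies inside the tracked floor space."""
    return 0.0 <= x <= FLOOR_WIDTH_M and 0.0 <= y <= FLOOR_DEPTH_M


def is_facing_screen(head_yaw_deg: float) -> bool:
    """True if the head points roughly towards the screen (yaw 0 = straight at it)."""
    # Normalise any angle to the range [-180, 180) before comparing.
    yaw = (head_yaw_deg + 180.0) % 360.0 - 180.0
    return abs(yaw) <= FACING_TOLERANCE_DEG


def should_awaken(x: float, y: float, head_yaw_deg: float) -> bool:
    """A creature is awakened when a user is on the floor and facing the screen."""
    return is_on_floor(x, y) and is_facing_screen(head_yaw_deg)


if __name__ == "__main__":
    print(should_awaken(3.0, 3.0, 0.0))     # centre of the floor, facing the screen
    print(should_awaken(3.0, 3.0, 180.0))   # centre of the floor, back to the screen
```

Because only head orientation is available (no eye-tracking), the tolerance would need tuning in user tests so that passers-by glancing sideways do not trigger the creatures by accident.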

Screen setup for demonstrating positioning: 2 screens placed at an angle

VISUAL CONCEPT

The original aesthetic setting in the design concept is a forest floor with particle effects resembling fireflies floating in the air. These fireflies can cluster into different shapes to represent the supernatural creatures mimicking the users, in this case humanoid Firefly Creatures.

However, the setting itself and the visualization of the creatures are deliberately designed to be interchangeable with other representations with relative ease.

For example, instead of fireflies, other types and styles of particle effects or even animated 3D meshes could be used. It is not necessary to use humanoids.

The digital environment around the creatures could change from a forest to any imaginable setting: underwater, in deep space, at a particular location indoors or outdoors, completely imaginary, or even a real-time video feed.

INTERACTIVE ANIMATIONS

As users are tracked continuously, their movements can be displayed on-screen in near real time as "mimicked" gestures performed by the Firefly Creatures.

These live user gestures can be blended with pre-existing animations, which are played either randomly or in an orchestrated manner to create the illusion that the creatures have "free will" and can break away from mimicking the users if they choose to. The Fireflies can also disperse from their humanoid form at random moments, adding to the unpredictability.

This method thus masks potential tracking issues, delays or problems with blending between real-time user gestures and existing animations.
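The blending of live gestures with pre-existing animations can be sketched as a weighted interpolation between two poses. The example is a simplification under several assumptions: poses are lists of joint positions, the tracking system is assumed to report a confidence value between 0 and 1 (the source does not say that it does), and the thresholds are placeholders. A production pipeline in Unreal Engine would more likely blend joint rotations through the engine's own animation tools.

```python
from typing import List, Tuple

Vec3 = Tuple[float, float, float]
Pose = List[Vec3]


def lerp(a: float, b: float, t: float) -> float:
    """Linear interpolation from a (t = 0) to b (t = 1)."""
    return a + (b - a) * t


def blend_poses(live: Pose, canned: Pose, live_weight: float) -> Pose:
    """Blend a live tracked pose with a pre-made animation pose.

    live_weight = 1.0 shows pure mimicry, 0.0 shows only the pre-made animation.
    """
    if len(live) != len(canned):
        raise ValueError("poses must have the same number of joints")
    w = min(1.0, max(0.0, live_weight))  # clamp to [0, 1]
    return [
        tuple(lerp(c, l, w) for c, l in zip(canned_joint, live_joint))
        for live_joint, canned_joint in zip(live, canned)
    ]


def live_weight_from_confidence(
    confidence: float, floor: float = 0.3, ceiling: float = 0.8
) -> float:
    """Map a (hypothetical) tracking confidence to a blend weight.

    Below `floor` the creature relies fully on pre-made animation, above
    `ceiling` it mimics fully, with a linear ramp in between.
    """
    if confidence <= floor:
        return 0.0
    if confidence >= ceiling:
        return 1.0
    return (confidence - floor) / (ceiling - floor)
```

With this arrangement a drop in tracking quality reads as the creature "getting distracted" rather than as a glitch, which is the masking effect the concept relies on.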

If done right, the experience should provoke the uneasy, mysterious feeling that the humanoids on the screen are alive and that they see you.

SUMMARY

● The use case is tailored to the new technology developed by the AI-company and targets a showcase environment in which up to 5 Firefly Creatures can be generated at the same time.

● The enchanting visual environment displayed on large screens and the interactivity of the Firefly Creatures are intended to generate intrigue and draw audiences, even if they do not want to interact directly.

● The creatures' visual representations are designed to be vague enough to accommodate the limitations of the tracking system, and to mask technical issues.

● Positional tracking is demonstrated by the placement of the Firefly Creatures in relation to the user.

● The interaction does not require any tutorial or monitoring by staff as the creatures are triggered automatically when users walk into the floor space.

● As this is unproven technology and an unproven use case, quantitative and qualitative user tests must be performed early on to uncover problems with the design concept as well as the implementation.